Scheduling simulations and ghosts in the cluster 🪄

Exploring Virtual Kubelets in Kubernetes: Part 1

A series of diary entries where I hack away at the Virtual Kubelets, what they stand for and what they don’t stand for and whether they can stand in the first place considering they are ghosts (you’ll figure out what I mean by that soon).

Scheduling Simulations

17th October 2024

I had a very interesting discussion with Anjul Sahu yesterday about the state of Cloud Computing and Kubernetes generally and he was gracious enough to catch me up on what the current state of GPU computing in India looks like.

After being a little out of touch with the Kubernetes ecosystem, it was nice to get this little crash course back into it. Today, after that discussion, I have decided to learn more about Virtual Kubelets and try them out. I also plan to continue hacking on something else later today, so I am going to keep it short.

The latest KubeCon video I could find on Virtual Kubelets is the one below.

Notes on SimKube

Why Simulation?

Simulating `workload scheduling` locally gives us a peek into how the workloads might perform on actual clusters, and it helps us debug by replaying simulations without having to run the workloads on real clusters.

We also talk about simulating the control plane components of the cluster here (not the applications).
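To make the record-and-replay idea a bit more concrete, here is a minimal sketch in Python. It is not SimKube’s trace format or API: the `TraceEvent` type and the `replay` function are made up for illustration. The sketch records timestamped changes to workload objects and replays them in order to rebuild the desired state at any point in the trace.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TraceEvent:
    """One recorded change to a workload object (hypothetical shape)."""
    ts: float          # seconds since the start of the trace
    action: str        # "apply" or "delete"
    name: str          # object name, e.g. a Deployment
    replicas: int = 0  # desired replicas; ignored for deletes


def replay(events, until=float("inf")):
    """Apply events in timestamp order and return the resulting desired state.

    The returned dict maps object name -> desired replica count, which is
    what a scheduling simulation would then try to place on fake nodes.
    """
    state = {}
    for ev in sorted(events, key=lambda e: e.ts):
        if ev.ts > until:
            break
        if ev.action == "apply":
            state[ev.name] = ev.replicas
        elif ev.action == "delete":
            state.pop(ev.name, None)
        else:
            raise ValueError(f"unknown action: {ev.action!r}")
    return state


trace = [
    TraceEvent(0.0, "apply", "web", 3),
    TraceEvent(5.0, "apply", "batch", 10),
    TraceEvent(9.0, "apply", "web", 6),
    TraceEvent(12.0, "delete", "batch"),
]

print(replay(trace, until=10.0))  # state partway through the trace
print(replay(trace))              # state at the end of the trace
```

Replaying only up to a chosen timestamp is what makes this useful for debugging: you can stop the simulated cluster at the moment things went wrong in production.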

SimKube Architecture

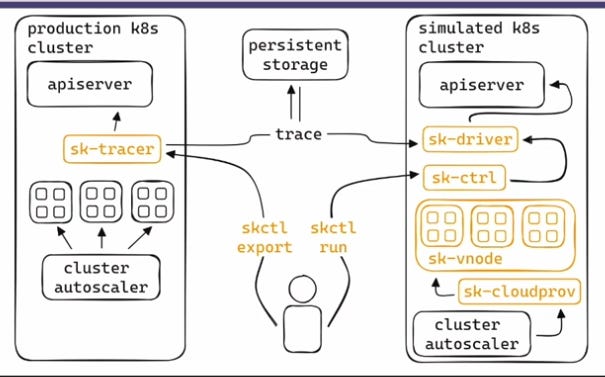

SimKube is the project being demoed in this talk, and the image here is its high-level architecture diagram. The components marked in yellow are the 6 components discussed in this talk. SimKube is also written in Rust, which is kind of amazing. Would definitely be nice to see more controllers being written in Rust ;)

User Interface to SimKube

skctl (CLI) - Helps talk to your production cluster for exporting data to persistent storage, and also helps replay that same data on the simulated cluster.

SimKube Components in the Production Cluster (eg: Azure, AWS EKS, GKE etc.)

sk-tracer (tracing service) - Runs in the production cluster. Collects data which is later stored in persistent storage.

When we want to use the data being collected by the tracer, we can call `skctl export`, which stores the data in some persistent storage from where it can be used. This data is then used to create simulations with `skctl run`, which creates a Simulation CustomResource.

SimKube Components in Simulated Cluster (eg: kind, minikube etc.)

SimKube Simulation Control Plane

sk-ctrl (kubernetes controller) - listens for the Simulation CustomResource.

To use the collected data from the production cluster in the simulated cluster, we have to run `skctl run`, which creates a Simulation CustomResource.

sk-driver (part of Simulation) - created by sk-ctrl when a Simulation CustomResource is created on the cluster.

It installs a Mutating Admission Webhook that intercepts all the pods and moves them to the target sk-vnode.
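To get a feel for how a mutating webhook can steer pods onto a virtual node, here is a rough sketch in Python. It is not sk-driver’s code. The node label and the taint key are hypothetical placeholders, and a real webhook would also need an HTTPS server and a MutatingWebhookConfiguration. The sketch only shows the shape of the `AdmissionReview` response, which carries a base64-encoded JSON Patch that adds a node selector and a toleration to the pod.

```python
import base64
import json

# Hypothetical label and taint carried by the virtual nodes; not SimKube's real values.
VNODE_SELECTOR = {"example.com/node-type": "virtual"}
VNODE_TOLERATION = {
    "key": "example.com/virtual-node",
    "operator": "Exists",
    "effect": "NoSchedule",
}


def build_patch(pod):
    """Return JSON Patch operations that steer `pod` onto the virtual nodes."""
    spec = pod.get("spec", {})
    selector = {**spec.get("nodeSelector", {}), **VNODE_SELECTOR}
    tolerations = list(spec.get("tolerations", []))
    if VNODE_TOLERATION not in tolerations:
        tolerations.append(VNODE_TOLERATION)
    # "add" on an existing object member replaces it (RFC 6902), so writing
    # the merged values works whether or not the fields were already set.
    return [
        {"op": "add", "path": "/spec/nodeSelector", "value": selector},
        {"op": "add", "path": "/spec/tolerations", "value": tolerations},
    ]


def mutate(review):
    """Build the AdmissionReview response for an incoming pod CREATE request."""
    request = review["request"]
    patch = json.dumps(build_patch(request["object"])).encode()
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": {
            "uid": request["uid"],  # the response must echo the request uid
            "allowed": True,
            "patchType": "JSONPatch",
            "patch": base64.b64encode(patch).decode(),
        },
    }


incoming = {
    "apiVersion": "admission.k8s.io/v1",
    "kind": "AdmissionReview",
    "request": {
        "uid": "1234",
        "object": {"kind": "Pod", "spec": {"nodeSelector": {"disk": "ssd"}}},
    },
}
response = mutate(incoming)["response"]
print(json.loads(base64.b64decode(response["patch"])))
```

The pod’s existing node selector is merged rather than overwritten, so the original scheduling constraints are still visible on the simulated pod.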

SimKube Core Components (the heart of SimKube)

sk-vnode (Virtual Kubelet based Virtual/Fake Node) - acts as a Kubernetes node and listens for workloads being created but doesn’t actually create them. sk-vnode is only supposed to simulate/mock the behavior of resources on the nodes.

sk-cloudprov (Cloud Provider for Cluster Autoscaler) - the Cluster Autoscaler interfaces with all the different cloud providers, and sk-cloudprov is the cloud provider here in SimKube. sk-cloudprov scales the nodes up and down if necessary for its simulations. Pod Deletion Cost is used to select nodes to terminate.
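I don’t know exactly how sk-cloudprov weighs Pod Deletion Cost, so the following Python sketch is only one plausible reading. It sums the `controller.kubernetes.io/pod-deletion-cost` annotation (a real Kubernetes annotation, where an unset value counts as 0) over the pods on each node and picks the node that is cheapest to remove. The `pick_node_to_remove` function and the node names are made up for illustration.

```python
POD_DELETION_COST = "controller.kubernetes.io/pod-deletion-cost"


def deletion_cost(pod):
    """Read the pod's deletion cost annotation; unset means 0."""
    annotations = pod.get("metadata", {}).get("annotations", {})
    return int(annotations.get(POD_DELETION_COST, "0"))


def pick_node_to_remove(pods_by_node):
    """Pick the node whose pods are cheapest to delete in total.

    `pods_by_node` maps node name -> list of pod dicts. Ties are broken by
    node name so the choice is deterministic.
    """
    if not pods_by_node:
        return None
    return min(
        pods_by_node,
        key=lambda node: (sum(deletion_cost(p) for p in pods_by_node[node]), node),
    )


def pod(cost=None):
    """Helper to build a pod dict with an optional deletion cost."""
    annotations = {} if cost is None else {POD_DELETION_COST: str(cost)}
    return {"metadata": {"annotations": annotations}}


cluster = {
    "vnode-a": [pod(100), pod(50)],
    "vnode-b": [pod(10), pod()],
    "vnode-c": [pod(10), pod(5)],
}
print(pick_node_to_remove(cluster))
```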

I was lucky enough to find drmorr aka David Morrison and his company’s blog here on Substack. If you like this tool, are intrigued by it and would like to hear more about the work Applied Computing Research Labs is doing, go ahead and subscribe to their blog below if you haven’t already.

At the end of the talk, David also spoke about possible future plans for the project. One of them stood out to me: “integrating with KWOK”. KWOK is Kubernetes WithOut Kubelet, and the point of the project is to be able to simulate nodes and clusters, which falls in line with what SimKube is trying to do.

21st October 2024 (morning)

Ghosts of the Node 👻

It’s spooky how Virtual Kubelets work. If a kubelet is the soul of a node then a virtual kubelet is a kubelet without a node (or a real node). Let’s first look at a normal kubelet in an actual node to understand what they are.

Let’s check out a (real) Kubelet (for now)

Kubelet is a cute name. It sounds like it’s a “mini-me” version of a Kube(rnetes). But that’s definitely not the case. It’s a crucial part of a Kubernetes cluster that manages the lifecycle of workloads on nodes.

Let’s take a look at what the kubelet looks like. I am running Docker Desktop on my Mac, so I am just going to create a kind cluster with the following command and peek into the container created for that cluster to see if I can find the kubelet service running.

kind create cluster

Let’s list the container running for the kind cluster.

docker container list

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a035b26721b6 kindest/node:v1.29.2 "/usr/local/bin/entr…" 5 minutes ago Up 5 minutes 127.0.0.1:51437->6443/tcp kind-control-plane

Let’s get into the container with the following command

docker exec -it a035b26721b6 bash

root@kind-control-plane:/#

And let’s see if we can find the kubelet service running here.

systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; preset: enabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf, 11-kind.conf

Active: active (running) since Mon 2024-10-21 00:32:04 UTC; 14min ago

Docs: http://kubernetes.io/docs/

Process: 701 ExecStartPre=/bin/sh -euc if [ -f /sys/fs/cgroup/cgroup.controllers ]; then /kind/bin/create-kubelet-cgroup-v2.sh; fi (code=exited, status=0/SUCCESS)

Process: 709 ExecStartPre=/bin/sh -euc if [ ! -f /sys/fs/cgroup/cgroup.controllers ] && [ ! -d /sys/fs/cgroup/systemd/kubelet ]; then mkdir -p /sys/fs/cgroup/systemd/kubelet; fi (code=exited, status=0/SUCCESS)

Main PID: 710 (kubelet)

Tasks: 20 (limit: 9310)

Memory: 36.8M

CPU: 21.179s

CGroup: /kubelet.slice/kubelet.service

└─710 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --container-runtime-endpoint=unix:///run/containerd/containerd.sock --node-ip=172.18.0.2 --node-labels= --pod-infra-container-image=registry.k8s.io/pause:3.9 --provider-id=kind://docker/kind/kind-control-plane --runtime-cgroups=/system.slice/containerd.service

And yes, we do. Under the above output there are kubelet logs which I haven’t pasted here. Those logs pertain to the pods and volumes being managed by this kubelet. Let’s look more into the kubelet logs.

journalctl -u kubelet | more

Just going through the logs here, noting down anything that stands out.

Container Manager is created based on Node Config.

There is a topology manager here.

Node is synced with API Server.

Relevant Kubernetes objects are queried from the API server.

kubelet initialized iptables rules. (I am saying this based on the log below. There seems to be contention online about whether the kubelet even has anything to do with iptables; some sources say it doesn’t, and the logs might say one thing while the code says another.)

kubelet_network_linux.go:50] "Initialized iptables rules." protocol="IPv4"

After the resource management is set up, we move on to the access control related setup, with certificate setup and so on, where the node is being registered.

From here on out, the Topology Admit Handler shows up quite a bit, along with other things which seem to be part of the reconciliation loop.

Let’s take a break here, having understood that a lot goes into resource allocation and workload creation by the kubelet, including communication and syncing with the API Server.
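When skimming logs like these, it helps to pull out the source file, the message and the key/value fields. The kubelet log fragment quoted above has the shape `file:line] "message" key="value"`, and the Python sketch below parses only that tail. It assumes the fragment looks exactly like the one I pasted; full journalctl lines carry a timestamp and other header fields in front, which this sketch simply skips over. The `parse_log_tail` name is my own.

```python
import re

# Matches the tail of a log line as quoted above: file:line] "message" key="value" ...
LINE_RE = re.compile(r'(?P<file>[\w.]+):(?P<line>\d+)\] "(?P<msg>[^"]*)"(?P<rest>.*)')
PAIR_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_log_tail(text):
    """Split a kubelet log fragment into source location, message and fields."""
    match = LINE_RE.search(text)
    if match is None:
        return None
    return {
        "file": match["file"],
        "line": int(match["line"]),
        "message": match["msg"],
        "fields": dict(PAIR_RE.findall(match["rest"])),
    }


sample = 'kubelet_network_linux.go:50] "Initialized iptables rules." protocol="IPv4"'
print(parse_log_tail(sample))
```

Having the file name handy makes it easier to go from a log line to the code that emitted it, which is one way to settle questions like the iptables one.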

Virtual Kubelets: The ghastly equivalent of a Kubelet

(Here, ghastly is being used to refer to virtual kubelets as being ghost-like)

Now that we have some idea of what a kubelet is, let’s focus on what happens when a kubelet doesn’t exactly have a node to live in. It won’t have resources of its own to handle, but it will still expose the interfaces, just without any real endpoints behind them. These interfaces can abstract node capabilities through the Container Runtime Interface (CRI), Container Storage Interface (CSI) and Container Network Interface (CNI) without being tied to actual hardware.

Virtual Kubelet is focused on providing a library that you can consume in your project to build a custom Kubernetes node agent.
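The Virtual Kubelet library itself is written in Go, and its provider interface is built around pod lifecycle methods such as `CreatePod`, `DeletePod`, `GetPodStatus` and `GetPods`. To show the idea without the Go plumbing, here is a loose Python analog. The `PodProvider` and `GhostProvider` names are my own, the method set is trimmed down, and nothing here talks to a real API server. The provider accepts pods and reports them as Running without starting a single container.

```python
from abc import ABC, abstractmethod


class PodProvider(ABC):
    """Python stand-in for the provider a custom node agent delegates to."""

    @abstractmethod
    def create_pod(self, pod): ...

    @abstractmethod
    def delete_pod(self, namespace, name): ...

    @abstractmethod
    def get_pod_status(self, namespace, name): ...

    @abstractmethod
    def get_pods(self): ...


class GhostProvider(PodProvider):
    """Accepts pods and reports them Running without starting any container."""

    def __init__(self):
        self._pods = {}

    def create_pod(self, pod):
        key = (pod["metadata"]["namespace"], pod["metadata"]["name"])
        self._pods[key] = {**pod, "status": {"phase": "Running"}}

    def delete_pod(self, namespace, name):
        self._pods.pop((namespace, name), None)

    def get_pod_status(self, namespace, name):
        pod = self._pods.get((namespace, name))
        return None if pod is None else pod["status"]

    def get_pods(self):
        return list(self._pods.values())


provider = GhostProvider()
provider.create_pod({"metadata": {"namespace": "default", "name": "nginx"}})
print(provider.get_pod_status("default", "nginx"))
provider.delete_pod("default", "nginx")
print(provider.get_pods())
```

Swapping `GhostProvider` for a class that starts the workload somewhere else (a VM, a batch system, a serverless platform) is the whole trick: the cluster only ever sees a node that answers for its pods.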

Using Virtual Kubelets to use VMs as a Provider

Now, I want to be able to use virtual Kubelets to connect a virtual machine to a kind cluster without having to deal with Kubernetes federation. This can be great for use cases where I don’t need to

21st October 2024 (afternoon)

For the use case I have for the virtual kubelet, it seems like Interlink is the perfect tool, and its Target Providers do include “Remote/on-demand virtual machines with any container runtime”. So let’s try this out.